This blog is mostly aimed at teachers and those interested in participating in the SSAT EFA programme. If that’s not you, though, don’t let it stop you from reading.

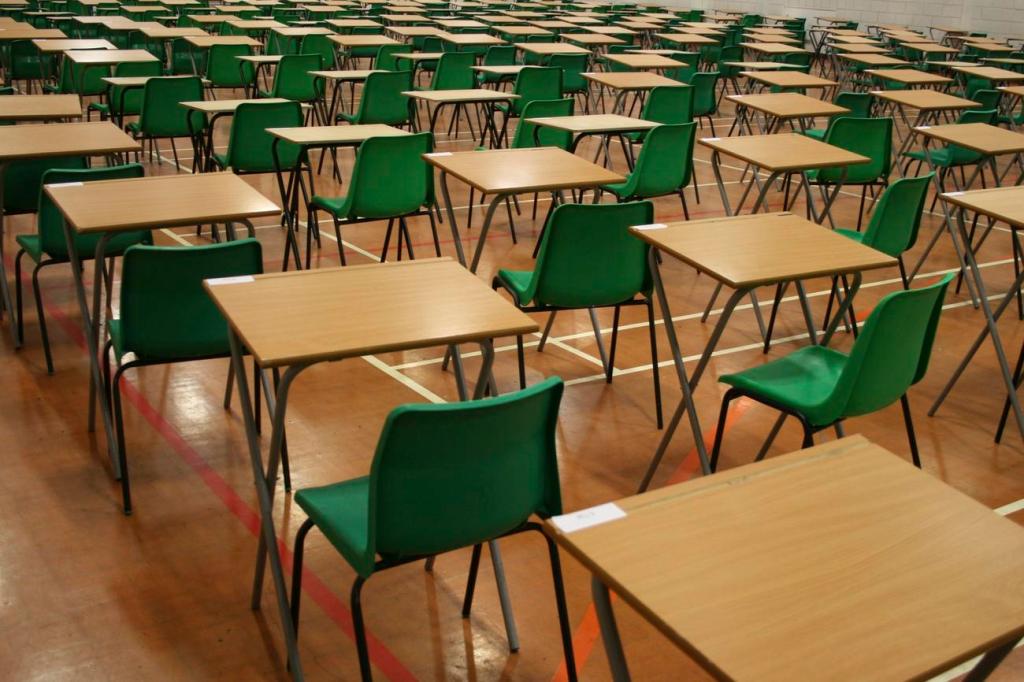

Summative assessments are about aims. They are high stakes, such as a SATs paper or a GCSE, and take place under rigorous conditions at the end of a considerable period of learning; in this way, they test the ‘storage strength’ of the material, i.e., how well a student can remember and recall what they have learned (Bjork & Bjork, 1992). These assessments sacrifice frequency and utility for high reliability. They often judge a candidate against a national standard, allowing comparison with others and supporting broad, generalised inferences. As a result, they provide “an accurate shared meaning” (Christodoulou, 2016, p. 84).

However, the grades used to communicate the outcome of a summative assessment always carry a degree of error and imprecision, because no measuring instrument is perfect, and measuring ‘invisible’ learning with blunt assessment tools is harder still. It would be more truthful to report that a student has achieved within a certain range, or to indicate uncertainty with a 95% confidence interval, rather than to give an absolute percentage (Rose, 2020). Summative assessments also reflect the outcomes of learning episodes that have finished, rather than the ongoing learning that formative assessment captures.

Formative assessment started life as ‘Assessment for Learning’ (AfL) and promised large educational gains, yet government intervention had a significant unintended consequence: instead of assessment for learning (formative assessment), AfL became assessment of learning, a summative process (Christodoulou, 2016, p. 21). So significant was this shift that Dylan Wiliam “wished he had called AfL ‘responsive teaching’, rather than using the word assessment” (Wiliam cited in Christodoulou, 2016, p. 21).

We need to be clear about what information we are trying to gather from any assessment and what we are going to do with it. If we mix up these purposes, we achieve neither well and end up with “generic targets [that] provide the illusion of clarity” (Christodoulou, 2016, p. 96).

Formative assessment is “the process used by teachers and students to recognise and respond to student learning in order to enhance that learning, during the learning” (Cowie & Bell, 1999, p. 32). These assessments tend to be low stakes, such as a diagnostic hinge question or exit ticket; they provide valuable information about the learning and development of understanding going on right now and feature in every lesson. They inform the teacher of misconceptions or immediate errors in understanding and allow for smaller-scale inferences that determine the next steps in teaching; they are all about methods (Christodoulou, 2016, p. 24).

Formative assessment, as we have seen throughout this project, is about judging performance more than judging learning. If you test a student five minutes after they have studied a concept, they are likely to recall much of it, yet a week later they may well have forgotten key points. “Current performance is often a poor proxy for learning which leads us into making erroneous inferences” (Didau & Rose, 2016, p. 101). Students themselves rate their learning more highly immediately after completing an activity than they would a week later, because “we are poor judges when we are learning well” (Brown, Roediger, & McDaniel, 2014, p. 3). The inferences I can draw from formative assessment are therefore somewhat limited and valid only in the short term, but they are useful nonetheless.

Learning errors can perpetuate poor understanding in any subject, and this is particularly true of the more abstract topics, which are known for common misconceptions. By the time a student sits a summative assessment, it is often too late to refine their learning, but formative assessment provides an opportunity to test students and respond to gaps in knowledge, understanding, or skills at the point of learning. As Didau and Rose state, “Few tests are bad in and of themselves, it’s what’s done with the data that determines whether they produce useful data” (2016, p. 97); in other words, treat data from assessments with caution.

References

Education Endowment Foundation (EEF). Embedding Formative Assessment. educationendowmentfoundation.org.uk

Charles Read Academy. Home page.

Bjork, R. A., & Bjork, E. L. (1992). A new theory of disuse and an old theory of stimulus fluctuation. Learning processes to cognitive processes: Essays in honour of William K. Estes, 2, 35–67. Retrieved from https://bjorklab.psych.ucla.edu/pubs/RBjork_EBjork_1992.pdf

Brown, P. C., Roediger, H. L., & McDaniel, M. A. (2014). Make It Stick. Cambridge, Massachusetts: Harvard University Press.

Christodoulou, D. (2016). Making Good Progress? Oxford: Oxford University Press.

Cowie, B., & Bell, B. (1999). A model of formative assessment in science education. Assessment in Education: Principles, Policy & Practice, 6(1), 32–42.

Didau, D., & Rose, N. (2016). What every teacher needs to know about psychology. Woodbridge: John Catt.

Rose, N. (2020). Responsive teaching and feedback. Ambition Institute Masters in Expert Teaching, Module 2: Assessment, Sheffield Hallam University.